Sports Data Collection Products: The engine behind sports data as a service (DaaS)

Data Collection Product Management

What exactly does a product manager working on a sports data collection product do?

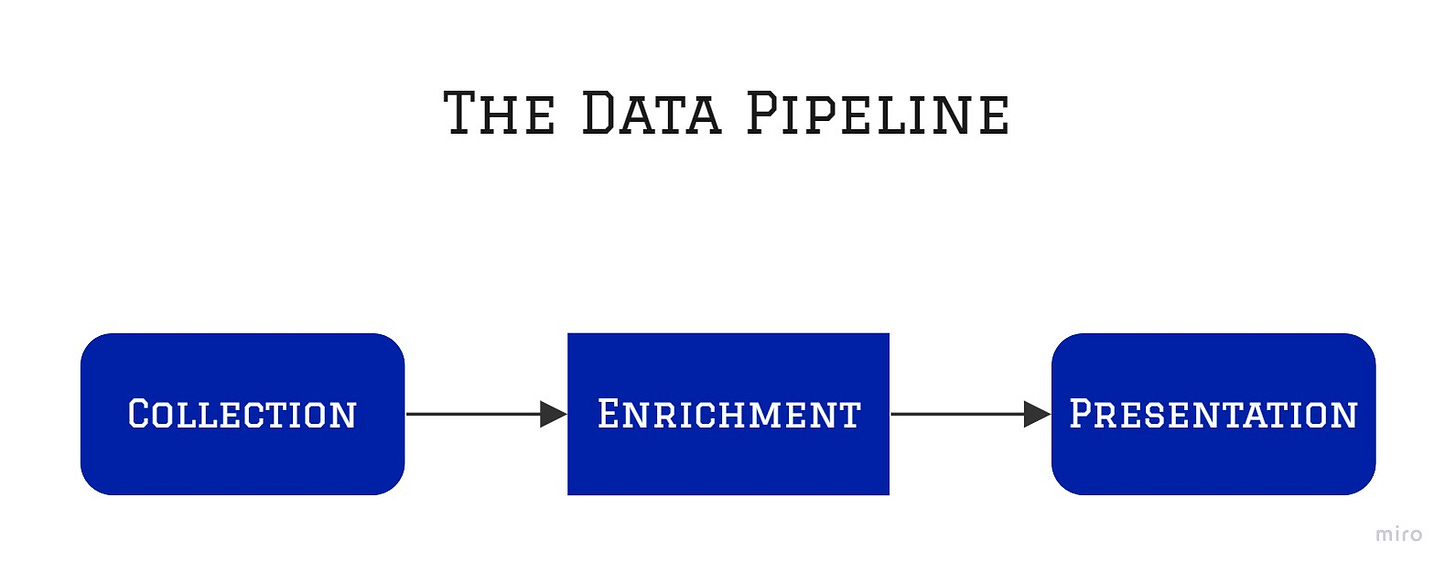

Data collection software facilitates the collection of data from sports matches. I am currently focused on the data collection experience for American football games. Now, this data could be consumed raw, just like steak tartare, but there aren't many customers with that palate. So the data goes through an enrichment process and is transformed into a product that customers (e.g. a data analyst or a coach) are more familiar with: understandable metrics visualized in delightful dashboards with powerful filters.

If you love football as I do, then this sounds like a dream, doesn't it? Well, I'm not gonna lie, it's great to work in an industry I love. But it's a double-edged sword. Every time I watch a game and some chaotic play happens, I wonder whether the software will break. I can't let myself just be a fan. But it's a small price to pay.

What does data collection software do?

So when I say the software collects data from American football games, what do I mean exactly? In simple terms, the software enables the collection of:

who did what, when, where, and, to a certain degree, how, for events involving the ball (we can call this event data)

where every player was on the field at any moment during a play (we can call this tracking data)

Event data is the building block for understanding the tendencies and performance of both teams and players. Tracking data adds further context to that performance analysis.
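To make the two data types concrete, here is a minimal Python sketch of how an event record and a tracking frame might be modeled. The class names, field names, and coordinate system (yards along and across the field, seconds on the game clock) are hypothetical assumptions for illustration, not the schema of any real collection product. The `separation` helper, also hypothetical, shows the kind of context tracking data adds: event data says a receiver made a catch, while tracking data can say how much space the receiver had when it happened.

```python
import math
from dataclasses import dataclass, field
from typing import Optional

# Hypothetical schema: names, units, and coordinates are illustrative assumptions.


@dataclass
class Event:
    """Event data: who did what, when, where, and (when it can be captured) how."""
    player_id: str            # who
    action: str               # what, e.g. "pass", "rush", "reception"
    quarter: int              # when
    game_clock_s: float       # when: seconds left in the quarter (assumed unit)
    x_yd: float               # where: yards along the field, 0-120 incl. end zones (assumed)
    y_yd: float               # where: yards across the field, 0-53.3 (assumed)
    detail: Optional[str] = None  # how, e.g. "play action"


@dataclass
class TrackingFrame:
    """Tracking data: where every player was at one instant during a play."""
    play_id: int
    timestamp_s: float        # seconds since the snap (assumed)
    positions: dict[str, tuple[float, float]] = field(default_factory=dict)

    def separation(self, player_id: str) -> float:
        """Distance in yards from a player to the closest other player in the frame.

        Simplification: players from both teams are considered.
        """
        here = self.positions[player_id]
        return min(
            math.dist(here, pos)
            for pid, pos in self.positions.items()
            if pid != player_id
        )


# Usage: an event says WR11 caught the ball; the matching frame adds context.
catch = Event("WR11", "reception", quarter=2, game_clock_s=431.0, x_yd=62.0, y_yd=20.0)
frame = TrackingFrame(
    play_id=57,
    timestamp_s=1.8,
    positions={"WR11": (62.0, 20.0), "CB24": (65.0, 24.0), "S31": (70.0, 30.0)},
)
print(frame.separation(catch.player_id))  # 5.0 yards to CB24
```

Keeping the two record types separate mirrors the split described above: events are sparse and ball-centric, while tracking frames are dense snapshots of all players that can be joined to an event by time.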

Adding value to the data supply chain

What are the biggest challenges in managing this product?

Let me frame this using Marty Cagan's four product risks:

value risk (whether customers will buy it or users will choose to use it)

usability risk (whether users can figure out how to use it)

feasibility risk (whether our engineers can build what we need with the time, skills, and technology we have)

business viability risk (whether this solution also works for the various aspects of our business)

For me, value risk has two audiences: the end customer and the internal user. My internal user is a data collector using the collection software.

For external customers, my role in adding value is indirect. If I can make the job of the data collectors easier, then the product will be better for external customers. How? I need to:

save data collectors time so they can collect more data

reduce or eliminate cognitive load so they can make fewer errors

facilitate an easy way to check data quality

In solution discovery, I tackle the other three risks with my product trio: me, the designer, and the tech lead. When evaluating solutions, we want to answer questions like:

Can we build this given our current resources and constraints?

Is it viable in the long term? Can we use it for other sports (abstract problem-solving)?

Is it usable? (Prototyping)

We have to design for users assuming they know nothing about the sport when they first start.

The tricky balance

How do I know if we are succeeding?

I track two all-important KPIs. In a sense, both are North Star Metrics.

Average collection time per game

Number of errors per game

I also have lower-level KPIs to track the speed and quality of individual tasks.

Optimizing for speed can sometimes hurt the quality of the data. Optimizing for data quality can sometimes force the data collector to slow down. And therein lies the rub!
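A minimal Python sketch can make the two North Star metrics and the tension between them concrete. It assumes each collected game is logged with its total collection time in minutes and the number of errors found in review; the `GameCollection` record, the `north_star_kpis` and `compare` functions, and all sample numbers are hypothetical illustrations, not real tooling or real results.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class GameCollection:
    """One collected game (hypothetical record; fields and units are assumptions)."""
    game_id: str
    collection_minutes: float  # total time spent collecting the game
    errors: int                # errors found for the game during quality review


def north_star_kpis(games: list[GameCollection]) -> tuple[float, float]:
    """Return (average collection minutes per game, average errors per game)."""
    if not games:
        raise ValueError("need at least one collected game")
    return (
        mean(g.collection_minutes for g in games),
        mean(g.errors for g in games),
    )


def compare(baseline: list[GameCollection], candidate: list[GameCollection]) -> str:
    """Classify how a change moved both metrics (lower is better for each)."""
    base_time, base_err = north_star_kpis(baseline)
    cand_time, cand_err = north_star_kpis(candidate)
    time_delta = cand_time - base_time
    err_delta = cand_err - base_err
    if time_delta <= 0 and err_delta <= 0 and (time_delta < 0 or err_delta < 0):
        return "improvement"
    if time_delta < 0 and err_delta > 0:
        return "faster but more errors"
    if err_delta < 0 and time_delta > 0:
        return "fewer errors but slower"
    return "no improvement"


# Usage with made-up numbers: the candidate speeds collection up but hurts quality.
baseline = [
    GameCollection("g1", 240.0, 12),
    GameCollection("g2", 210.0, 9),
    GameCollection("g3", 270.0, 6),
]
candidate = [GameCollection("g4", 200.0, 11), GameCollection("g5", 190.0, 13)]
print(north_star_kpis(baseline))
print(compare(baseline, candidate))  # faster but more errors
```

Reporting both metrics side by side, rather than folding them into a single score, keeps the trade-off visible: a change that wins on speed while losing on quality shows up as exactly that.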

How do we ensure we meet the desired quality for our customers while getting them their data on time?

Going back to how I add value, the first bullet point says:

save data collectors time so they can collect more data

In an ideal world, AI would take over the collection itself, and the data collector's job would become that of a data reviewer. But that requires more R&D to improve what the AI can do.

So I need to engage in continuous discovery on two fronts: keeping up to date with the latest developments in AI, and understanding the problems my users face today.

When we can take a big swing, we should! We cannot A/B test our way to big impact all the time. That said, I try to work in smaller experiments so we can learn faster whether we're on the right track.

Data collection for sports has a lot of fun and weird problems to solve. But the main takeaway here is that problems in the DaaS world can be abstracted and worked through like those of any other product. By being customer-centric, employing continuous discovery, and working through experiments, we can address and minimize the big risks that lead to catastrophic failure.